Humanoid robots are no longer the stuff of science fiction. From walking on two legs to manipulating objects and interacting with people, robots are edging closer to becoming part of our everyday lives. One fascinating aspect of this progress is teaching robots dynamic skills that humans pick up through practice, such as skateboarding. Scientists are now working on making humanoid robots proficient skateboarders, and their success could have broader implications for the future of robotics and artificial intelligence (AI).

A team of researchers, led by William Thibault and Vidyasagar Rajendran at the University of Waterloo, Canada, has taken on this challenge. They have been using a method known as reinforcement learning (RL) to teach the REEM-C humanoid robot to skateboard in a simulated environment. If the idea of a robot mastering a skateboard seems far-fetched, the results of this study suggest it may be more achievable than you'd think.

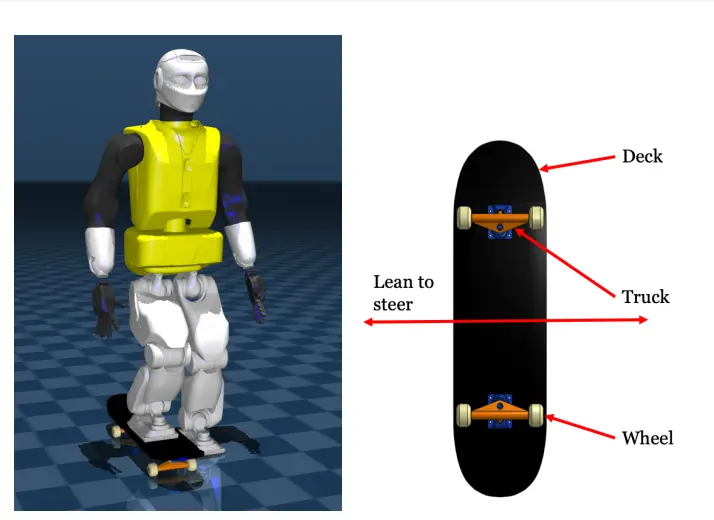

So, why skateboarding? Skateboarding represents a unique challenge for robots because it combines balance, coordination, and continuous movement—all of which are difficult for machines to handle. Unlike simple walking or running, skateboarding involves shifting weight, maintaining balance, and coordinating different parts of the body to push off the ground while staying on the board. It’s a task that requires both precision and strength, which is exactly what the researchers wanted to see their robot develop.

To achieve this, they used Brax/MJX, a physics simulation toolkit built on Google's JAX library that can run on accelerators such as GPUs. It let them train the robot in a massively parallel way, simulating thousands of skateboarding scenarios at once, which made the learning process significantly faster. “We could train the robot to skateboard in hours rather than weeks thanks to the Brax engine, which allowed us to simulate multiple environments at once,” explains William Thibault, one of the lead researchers on the project.

Reinforcement learning is essentially a trial-and-error method for machines, similar to how humans learn new skills. The robot receives rewards for successful moves, such as keeping its balance on the skateboard, and penalties for unsuccessful ones. Over time, it learns which movements to favor and which to avoid. This process enabled the REEM-C robot to develop a smooth, balanced motion in which one foot pushed against the ground while the other stayed firmly on the skateboard, much as a human skater would do it.
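To make the reward-and-penalty idea concrete, here is a toy reward function in Python. It is a minimal sketch, not the reward used in the study: the state fields (`torso_height`, `board_speed`, `stance_foot_on_board`, `fallen`), the target speed, the height threshold, and all weights are hypothetical, chosen only to show how positive terms and penalties combine into a single score that an RL algorithm tries to maximize over many trials.

```python
from dataclasses import dataclass


@dataclass
class SkateState:
    """Hypothetical snapshot of the robot-and-board state at one control step."""
    torso_height: float          # metres above the ground
    board_speed: float           # forward speed of the skateboard, m/s
    stance_foot_on_board: bool   # is the supporting foot touching the board?
    fallen: bool                 # has this trial ended in a fall?


# Hypothetical tuning constants for illustration only.
TARGET_SPEED = 1.0       # desired board speed, m/s
MIN_TORSO_HEIGHT = 0.8   # below this, treat the posture as losing balance, m
FALL_PENALTY = -10.0


def skate_reward(s: SkateState) -> float:
    """Toy shaped reward: positive terms for good behaviour, penalties for bad."""
    if s.fallen:
        # A fall overrides everything else with a large penalty.
        return FALL_PENALTY

    reward = 0.0
    # Speed-tracking term in [0, 1]: 1.0 at the target speed, 0.0 when far off.
    speed_error = abs(s.board_speed - TARGET_SPEED) / TARGET_SPEED
    reward += 1.0 - min(speed_error, 1.0)
    # Bonus for keeping the stance foot on the board, penalty for losing it.
    reward += 0.5 if s.stance_foot_on_board else -0.5
    # Penalty for sinking too low, used here as a crude proxy for poor balance.
    if s.torso_height < MIN_TORSO_HEIGHT:
        reward -= 1.0
    return reward


if __name__ == "__main__":
    good = SkateState(torso_height=0.95, board_speed=1.0,
                      stance_foot_on_board=True, fallen=False)
    bad = SkateState(torso_height=0.6, board_speed=0.2,
                     stance_foot_on_board=False, fallen=False)
    print(skate_reward(good), skate_reward(bad))
```

A learning algorithm never sees these rules directly; it only observes the numbers that come back after each action and gradually adjusts its behaviour toward states that score higher, which is how the trial-and-error loop described above plays out in practice.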

What makes this study particularly intriguing is the emergent behavior the robot displayed. For example, the REEM-C robot began leaning its upper body forward during the skateboarding motion, mimicking how a human skateboarder naturally shifts their body to maintain balance. “We didn’t specifically teach the robot to lean forward,” says Vidyasagar Rajendran, the study's other lead researcher, “but it figured out that this posture helped it stay upright and stable during the push-off phase.” These emergent behaviors matter because they show that the robot is not just following strict commands but is beginning to move in more fluid, human-like ways.

The results of this project could matter well beyond skateboarding. The research is a step toward humanoid robots that can carry out more complex, everyday tasks in human environments. The same kind of learning could help with tasks that demand balance, coordination, and adaptability, such as navigating uneven surfaces, carrying objects while walking, or assisting with physically demanding work. Robots that can master skateboarding may well be able to tackle more practical activities, like climbing stairs, walking on slippery floors, or crossing rocky, uneven ground.

The researchers aren’t stopping here. Their next goal is to transfer these skills from simulation to the real world, so the robot can ride an actual skateboard. They are also investigating more advanced maneuvers, such as gliding with both feet on the board and turning by shifting weight. In essence, they aim to teach the robot a full skateboarding routine, which could open the door to even more demanding mobility tasks.

The broader implications of this research could be far-reaching. Robots that can learn to perform dynamic tasks like skateboarding could be useful in various sectors, including healthcare, disaster relief, and even space exploration. For instance, in disaster zones where the terrain is unpredictable, robots with enhanced mobility could help deliver supplies, rescue people, or navigate dangerous areas that would be too risky for humans. Similarly, in space exploration, robots capable of navigating uneven terrain could assist astronauts in exploring the surface of planets like Mars.

Beyond these real-world applications, the research highlights the potential of reinforcement learning for other complex robotic tasks. Tools like Brax/MJX, which can simulate many scenarios quickly and efficiently, could accelerate the development of other robot skills, from simple navigation to advanced object manipulation.

As robotics and AI continue to evolve, the ability of machines to learn and adapt will play a critical role in their integration into human environments. The skateboarding project is just one exciting example of how far we’ve come, but it’s also a hint of where we’re headed. If robots can learn to balance on a skateboard in simulation today, who knows what they’ll be capable of tomorrow? As William Thibault puts it, “Skateboarding is just the beginning. The skills the robot learns here could pave the way for much more practical applications in the future.”

With this kind of progress, it’s clear that humanoid robots are on track to become more than just a curiosity. They could soon be essential tools in many aspects of our lives. And while we may not see robots skating down the street anytime soon, the technology behind this research is moving us closer to a world where machines can move, adapt, and learn in ways we never thought possible.

Citation: Thibault, W., Rajendran, V., Melek, W., & Mombaur, K. (2024). Learning skateboarding for humanoid robots through massively parallel reinforcement learning. arXiv. https://doi.org/10.48550/arXiv.2409.07846